The Voice-Powered Web: combining browsers with AI voice assistants

What if you could interact with the Web via voice assistants like Alexa, Bixby, Cortana, Google Assistant and Siri?

“Go to mozillafestival.org. What are the navigation items? … Go to ‘Schedule’.”

Imagine voice was a first-class input device on the Web.

Imagine your favourite voice assistant could be your navigator as you interact on the Web, reading pages to you and filling out forms for you.

And imagine a web page could advertise its own voice service to your browser, allowing you to interact with it in a natural way.

This was the topic of our recent “AI Voice Assistants & The Web” session at Mozilla Festival in London, in collaboration with patrick h. lauke from The Paciello Group and Michael Henretty from Mozilla’s Common Voice Project.

Our “AI Voice Assistants & The Web” session at Mozilla Festival 2017. Inset: the world’s worst “The Voice” fan art?

Voice assistants are on the rise…

Big tech companies like Samsung (my employer), Apple, Amazon, Google and Microsoft are all investing heavily in their voice assistants. They are set to become even more ubiquitous in our homes and other environments over the next few years (see Project Ambience, for example).

…and they are already talking to web browsers

Voice assistants are already starting to gain the ability to control our web browsers. Here’s an example I recorded to demonstrate Samsung’s Bixby:

What if we could go a step further and be able to interact with the web pages themselves via voice too?

The Web has had tools for voice output for a long time: screen readers. Patrick began the session by sharing how screen readers are usually used via a keyboard and mouse. They can be good at outputting what’s on the screen (plus programmatic properties), but they’re essentially bound by outputting what’s visually there. Correctly coded web content can be read, understood and operated with screen readers. On complex pages though, it can be tedious.

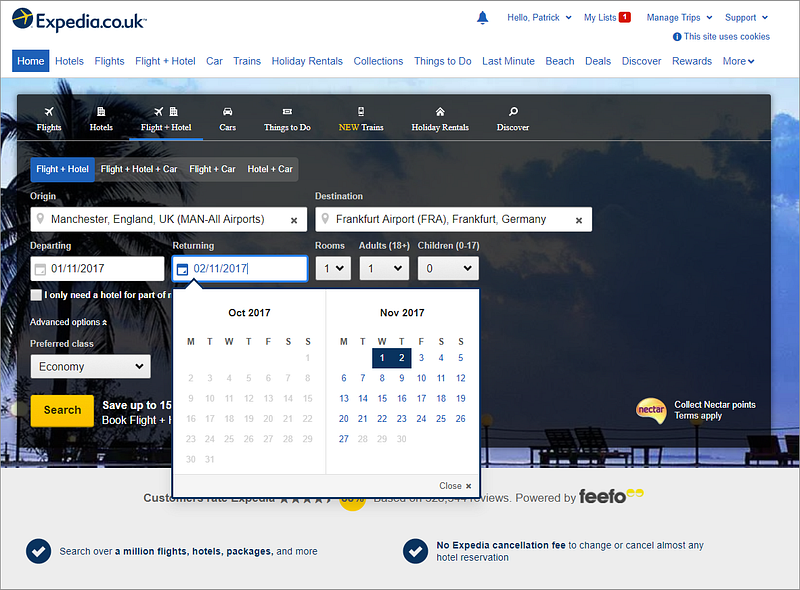

What if we could use our voice assistants for voice input on the Web too? Patrick shared an example of searching for flights. Imagine if — instead of navigating a form like this (especially via a screen reader) — we could just say: “find flights from Manchester to Frankfurt for Wednesday next week, coming back the following day”.

Expedia.co.uk — an example of a complex input form — could it be easier to control via voice?

Could our new wave of ‘AI’ voice assistants perform the functions of screen readers, but in a smarter way? Could they be useful for web users without the ability to use a touchscreen/keyboard/mouse, or those who struggle to use them for longer periods due to conditions such as RSI?

Open voice services

Michael then shared how Mozilla are working on foundations for open, public voice services. Training a voice assistant takes a lot of data though: 10,000 hours of recordings. To put that in context, the total of all of the TED talks out there comes to about 100 hours: still 2 orders of magnitude away! That’s why Mozilla have opened up Project Common Voice to allow the public to lend their own voices. They created an experiment called Voice Fill to allow you to search via voice on Google, Yahoo and DuckDuckGo. And they have been starting to explore the idea of open voice service registration under a preliminary name of Voice HTML.

Use cases

We then asked our participants for help in a brief design thinking session. What use cases are there for voice interaction on the Web? After brainstorming, we asked them to select a particular scenario and sketch it out in groups.

We had some great, thought-provoking ideas, including:

- Voice ID: could smart voice assistants help us to do away with passwords? They could potentially recognise our voice and authenticate us directly. We are already seeing telephone banking start to incorporate this, so perhaps we can incorporate it into the Voice-Powered Web too? Could it work well with WebAuthn?

- Content filtering: instead of simply reading some web-based content to you verbatim, a smart assistant could adapt it for you, if you wish. For example, it could omit things that you’ve asked it to filter out, or replace offensive words with milder ones.

- Going hands-free: a number of groups discussed useful hands-free situations such as cooking, practising first aid and translating your speech when you’re abroad. What if we could power these kinds of voice interactions using the Web?

- Empathy: smart voice assistants could detect your mood and use that to automatically adapt their tone and their suggestions.
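To make the content filtering idea a little more concrete, here is a minimal sketch of how an assistant might adapt text before reading it aloud. Everything in it is hypothetical: the `FilterPreferences` shape, the `adaptForSpeech` function and the naive sentence splitting are assumptions for illustration, not part of any existing assistant or browser API.

```typescript
// Hypothetical preferences a user might give their assistant.
interface FilterPreferences {
  // Sentences mentioning any of these topics are skipped entirely.
  omitTopics: string[];
  // Words to swap for milder alternatives before reading aloud.
  replacements: { [word: string]: string };
}

// Escape characters that have a special meaning in a regular expression.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Return the text the assistant would read out, given the user's preferences.
function adaptForSpeech(content: string, prefs: FilterPreferences): string {
  // Naive sentence split: runs of text ending in '.', '!' or '?'.
  const sentences = (content.match(/[^.!?]+[.!?]*/g) || [])
    .map((sentence) => sentence.trim())
    .filter((sentence) => sentence.length > 0);

  // Drop any sentence that mentions a topic the user asked to omit.
  const kept = sentences.filter((sentence) => {
    const lower = sentence.toLowerCase();
    return !prefs.omitTopics.some(
      (topic) => lower.indexOf(topic.toLowerCase()) !== -1
    );
  });

  // Replace whole words, ignoring case. The milder word is inserted as given,
  // so the original capitalisation is not preserved.
  const softened = kept.map((sentence) => {
    let result = sentence;
    for (const word of Object.keys(prefs.replacements)) {
      const pattern = new RegExp("\\b" + escapeRegExp(word) + "\\b", "gi");
      result = result.replace(pattern, prefs.replacements[word]);
    }
    return result;
  });

  return softened.join(" ");
}

// Example usage with made-up content and preferences.
const spoken = adaptForSpeech(
  "The weather is damn cold. Spoilers ahead for the finale! See you soon.",
  { omitTopics: ["spoilers"], replacements: { damn: "darn" } }
);
console.log(spoken);
```

A real assistant would need something far smarter than substring matching to decide what counts as a topic, but the shape of the idea is the same: the page provides the content, and the user's preferences decide what is actually spoken.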

Sorry if I missed anything out: if you attended the session and you would like me to add or edit anything, or add a credit, please just leave a comment :-)

Next steps

We think that there is massive potential for the Voice-Powered Web, and we think it’s time to start discussing together how the Web can facilitate these kinds of interactions in an open, secure and privacy-respecting way.

You can check out our slides from the session here. If you’re interested in this topic and you would like to participate in further conversations and potential future web standards work, please join the WICG discussion here, send us an email, or leave a comment below.
